- "Optimizing links for maximum payload throughput" is exam speak for compression.

- Compression is not recommended for files that are already compressed or stored in a compressed format, because compressing them again yields little or no gain.

TCP Header Compression

- Is a mechanism that compresses the TCP header in a data packet before the packet is transmitted.

- Configured with "ip tcp header-compression".

STAC Compression

- STAC is a lossless data compression mechanism that uses the LZS (Lempel-Ziv-Stac) algorithm.

- Configured under the interface with "compress stac".

Predictor

- Uses the RAND compression algorithm.

- Configured using "compress predictor" along with PPP encapsulation.

RTP Header Compression

- Reduces the combined IP/UDP/RTP header from 40 bytes to 2-5 bytes.

- Best used on slow-speed links for real-time traffic with small data payloads, such as VoIP.

- To configure on a serial link, use "ip rtp header-compression".

- To enable per VC, use the command

frame-relay map ip {IP} {DLCI} [broadcast] rtp header-compression

- The 'passive' keyword means the router will not send compressed RTP headers unless it has received compressed RTP headers from the other side.
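To put the 40-byte versus 2-5-byte figures in perspective, the short Python sketch below estimates the bandwidth of a single voice stream with and without compressed headers. The 20-byte payload and 50 packets per second are assumed example values, not taken from these notes, and Layer 2 overhead is ignored, so the results are a rough illustration only.

```python
# Illustrative arithmetic only; Layer 2 overhead is ignored.
UNCOMPRESSED_HEADER_BYTES = 40  # IP (20) + UDP (8) + RTP (12)


def voice_bandwidth_bps(payload_bytes, header_bytes, packets_per_second):
    """Return the bandwidth one voice stream needs, in bits per second."""
    return (payload_bytes + header_bytes) * 8 * packets_per_second


# Assumed example stream (hypothetical values, not from the notes).
PAYLOAD_BYTES = 20
PACKETS_PER_SECOND = 50

uncompressed = voice_bandwidth_bps(
    PAYLOAD_BYTES, UNCOMPRESSED_HEADER_BYTES, PACKETS_PER_SECOND
)
compressed_best = voice_bandwidth_bps(PAYLOAD_BYTES, 2, PACKETS_PER_SECOND)
compressed_worst = voice_bandwidth_bps(PAYLOAD_BYTES, 5, PACKETS_PER_SECOND)

print(uncompressed)      # 24000
print(compressed_best)   # 8800
print(compressed_worst)  # 10000
```

Under these assumptions, the header accounts for two thirds of every uncompressed packet, which is why the gain is largest for small payloads.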

sh ip tcp header-compression - Shows header compression statistics

sh frame-relay map - Shows the configured header compression per DLCI

interface se0/0

compress stac - Configures lossless data compression mechanism

interface se1/0

encap ppp - Required for predictor

compress predictor - Enables the RAND algorithm compression

ip tcp header-compression - Enables TCP header compression

ip rtp header-compression [passive] [periodic-refresh]

- Enables RTP header compression

- [passive]: Compress only for destinations that send compressed RTP headers

- [periodic-refresh]: Send periodic refresh packets

Note: Only one side of the link uses the passive keyword. If both sides are set to passive, cRTP does not occur because neither side of the link ever sends compressed headers.

interface s0/1.1

frame-relay map ip {ip} {dlci} rtp header-compression [connections] [passive] [periodic-refresh]

- Enables RTP header compression per VC

- [connections]: Maximum number of compressed RTP connections (default = 256)

- [passive]: Compress only for destinations that send compressed RTP headers

- [periodic-refresh]: Send periodic refresh packets

frame-relay ip tcp header-compression [passive]: Enables TCP header compression on a Frame Relay interface

Multilink PPP

To reduce the latency experienced by a large packet exiting an interface (that is, serialization delay), Multilink PPP (MLP) can be used in a PPP environment, and FRF.12 can be used in a VoIP over Frame Relay environment. First, consider MLP. Multilink PPP, by default, fragments traffic. This characteristic can be leveraged for QoS purposes, and MLP can be run even over a single link. The MLP configuration is performed under a virtual multilink interface, and then one or more physical interfaces can be assigned to the multilink group. The physical interface does not have an IP address assigned. Instead, the virtual multilink interface has an IP address assigned. For QoS purposes, a single interface is typically assigned as the sole member of the multilink group. Following is the syntax to configure MLP:

1. interface multilink [multilink_interface_number]: Creates a virtual multilink interface

2. ip address ip_address subnet_mask: Assigns an IP address to the virtual multilink interface

3. ppp multilink: Configures fragmentation on the multilink interface

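4. ppp multilink interleave: Interleaves small packets (such as voice) between the fragments of larger packets

5. ppp multilink fragment-delay [serialization_delay]: Specifies the maximum time, in milliseconds, a fragment may take to exit the interface; the fragment size is derived from this value and the link bandwidth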

6. encapsulation ppp: Enables PPP encapsulation on the physical interface

7. no ip address: Removes the IP address from the physical interface

8. multilink-group [multilink_group_number]: Associates the physical interface with the multilink group

Example: to achieve a serialization delay of 10 ms

R1(config)# interface multilink 1

R1(config-if)# ip address 10.1.1.1 255.255.255.0

R1(config-if)# ppp multilink

R1(config-if)# ppp multilink interleave

R1(config-if)# ppp multilink fragment-delay 10

R1(config-if)# exit

R1(config)# interface serial 0/0

R1(config-if)# encapsulation ppp

R1(config-if)# no ip address

R1(config-if)# multilink-group 1

R2(config)# interface multilink 1

R2(config-if)# ip address 10.1.1.2 255.255.255.0

R2(config-if)# ppp multilink

R2(config-if)# ppp multilink interleave

R2(config-if)# ppp multilink fragment-delay 10

R2(config-if)# exit

R2(config)# interface serial 0/0

R2(config-if)# encapsulation ppp

R2(config-if)# no ip address

R2(config-if)# multilink-group 1
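The reason fragmentation matters can be checked with a little arithmetic. The Python sketch below computes serialization delay from packet size and line speed. The 64 kbps link, the 1500-byte packet, and the 80-byte fragment are assumed example values, and the function name is hypothetical.

```python
def serialization_delay_ms(packet_bytes, line_speed_bps):
    """Return the time, in milliseconds, needed to clock a packet onto the wire."""
    return packet_bytes * 8 * 1000 / line_speed_bps


# Assumed example values: a 64 kbps link, a 1500-byte packet, an 80-byte fragment.
LINE_SPEED_BPS = 64_000

large_packet_delay = serialization_delay_ms(1500, LINE_SPEED_BPS)
fragment_delay = serialization_delay_ms(80, LINE_SPEED_BPS)

print(large_packet_delay)  # 187.5
print(fragment_delay)      # 10.0
```

Under these assumptions, a voice packet queued behind an unfragmented 1500-byte packet could wait almost 190 ms, whereas behind an 80-byte fragment it waits about 10 ms.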

Link fragmentation and interleaving (LFI) can also be performed on a Frame Relay link using FRF.12. The FRF.12 configuration builds on a Frame Relay traffic shaping (FRTS) configuration. Only one additional command, given in map-class configuration mode, is needed to enable FRF.12. The syntax for that command is as follows:

Router(config-map-class)# frame-relay fragment fragment-size: Specifies the size of the fragments, in bytes

As a rule of thumb, the fragment size (in bytes) should be set to the line speed (in bps) divided by 800. For example, if the line speed is 64 kbps, the fragment size can be calculated as follows:

fragment size = 64,000 / 800 = 80 bytes

This rule of thumb specifies a fragment size (80 bytes) that creates a serialization delay of 10 ms.
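The divide-by-800 rule is the 10 ms target expressed in bytes: line speed in bits per second, times 0.010 seconds, divided by 8 bits per byte. A minimal Python sketch of that calculation follows. The function name and the extra line speeds are illustrative assumptions, not values from these notes.

```python
def fragment_size_bytes(line_speed_bps, target_delay_ms=10):
    """Return the fragment size, in bytes, that takes target_delay_ms to serialize.

    With the default 10 ms target this reduces to line_speed_bps / 800.
    """
    return line_speed_bps * target_delay_ms // 8000


# 64 kbps is the example from the text; the other speeds are illustrative.
for speed in (64_000, 128_000, 256_000):
    print(speed, fragment_size_bytes(speed))
# 64000 80
# 128000 160
# 256000 320
```

Passing a different `target_delay_ms` shows how the fragment size scales if a delay budget other than 10 ms is chosen.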

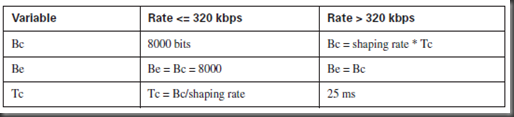

The following example shows an FRF.12 configuration to create a serialization delay of 10 ms on a link clocked at a rate of 64 kbps. Because FRF.12 is configured as part of FRTS, CIR and Bc values are also specified.

R1(config)# map-class frame-relay FRF12-EXAMPLE

R1(config-map-class)# frame-relay cir 64000

R1(config-map-class)# frame-relay bc 640

R1(config-map-class)# frame-relay fragment 80

R1(config-map-class)# exit

R1(config)# interface serial 0/1

R1(config-if)# frame-relay traffic-shaping

R1(config-if)# interface serial 0/1.1 point-to-point

R1(config-subif)# frame-relay interface-dlci 101

R1(config-fr-dlci)# class FRF12-EXAMPLE
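The CIR and Bc values in this example appear to be chosen so that the shaping interval matches the 10 ms fragment delay. The sketch below checks that with the standard relationship Tc = Bc / CIR. The function name is hypothetical, and the reading of the example's intent is an assumption.

```python
def shaping_interval_ms(bc_bits, cir_bps):
    """Return the traffic-shaping interval Tc, in milliseconds, as Bc / CIR."""
    return bc_bits * 1000 / cir_bps


# Values from the map-class in the example configuration.
CIR_BPS = 64_000
BC_BITS = 640

tc_ms = shaping_interval_ms(BC_BITS, CIR_BPS)
print(tc_ms)  # 10.0

# An 80-byte fragment is 640 bits, so one fragment fits in each Tc.
print(80 * 8 == BC_BITS)  # True
```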